I’m very pleased to announce that my thriller, A Semblance of Control, was released by Down&Out Books. This is my fourth title with them, following Dark as Night, Killer's Coda, and Breaking Character. Here's a quick pitch for Semblance: "To save his kidnapped girlfriend, Jake must defeat a plot to kill his estranged brother, the Mayor of … Continue reading A Semblance of Control is on bookshelves now!

Breaking Character released by Down&Out Books

I'm very pleased to announce that my thriller, Breaking Character, has been published by Down&Out Books. This book is the result of a great deal of work, writing and re-writing, and not a little heartache. But I'm very excited to be publishing with the good people at Down&Out. They do great work, and I'm more … Continue reading Breaking Character released by Down&Out Books

Dark as Night Chapter One Download

All, as you may know, my novel Dark as Night is being published next week by the good people at Down & Out Books. It is available for pre-order here. In anticipation of its release, I'm making available the first chapter as a pdf download. Click on the link below. Enjoy! dark-as-night-chapter-one-2

Dark as Night Release by Down and Out Books

Very happy to announce that my novel Dark as Night is being published by the good people at Down and Out Books! It's available now for pre-order and will be released in February. https://downandoutbooks.com/bookstore/conard-dark-night/

SCIENCE, MIND, AND GOD: Recap

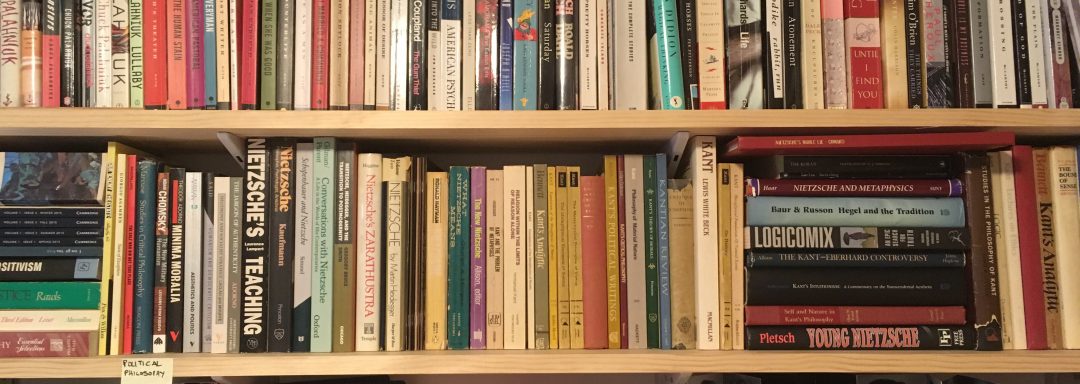

I’m behind on my blog-posting. So, while I struggle to get caught up, I thought I’d offer a recap of what’s come so far in this “Science, Mind, and God” series. I hope to post the next installment on Descartes soon, within the next few weeks. Part I: The New Atheists The series was motivated … Continue reading SCIENCE, MIND, AND GOD: Recap

Science, Mind, and God. Part IV: Plato

Not long ago I developed and taught a class on the so-called New Atheists, a group of thinkers who put out books around the same time (2004 – 2007) arguing against Western religion. I only taught the class twice, and two unexpected things happened in the course of my delving deeply into these authors and … Continue reading Science, Mind, and God. Part IV: Plato

SCIENCE, MIND, AND GOD. PART III: THE GREEKS DISCOVER THE COSMOS

Not long ago I developed and taught a class on the so-called New Atheists, a group of thinkers who put out books around the same time (2004 – 2007) arguing against Western religion. I only taught the class twice, and two unexpected things happened in the course of my delving deeply into these authors and … Continue reading SCIENCE, MIND, AND GOD. PART III: THE GREEKS DISCOVER THE COSMOS

SCIENCE, MIND, AND GOD. PART II: THE (IL)LIBERAL COLLEGE STUDENT

Not long ago I developed and taught a class on the so-called New Atheists, a group of thinkers who put out books around the same time (2004 – 2007) arguing against Western religion. As a long-time devotee of Nietzsche, I was intrigued by these books and sympathetic towards many of the arguments they contained. I’ll … Continue reading SCIENCE, MIND, AND GOD. PART II: THE (IL)LIBERAL COLLEGE STUDENT

Ten Day Facebook Film Challenge

Recently I was tagged on a “Ten Day Film Challenge” on Facebook, in which I posted an image from a film that has impacted me but with no explanation (one movie for each of ten successive days). Not much of a challenge, really, and I have to confess that I didn’t give a lot of … Continue reading Ten Day Facebook Film Challenge

Science, Mind, and God. Part I: The New Atheists

Not long ago I developed and taught a class on the so-called New Atheists, a group of thinkers who put out books around the same time (2004 – 2007) arguing against Western religion. As a long-time devotee of Nietzsche, I was intrigued by these books and sympathetic towards many of the arguments they contained. I’ll … Continue reading Science, Mind, and God. Part I: The New Atheists